Wave analysis would be great too, but to be used as “the robot” when I run out of fingers and feet… It would be sweet if pre-recorded audio effects (panning, filtering, etc.) could manipulate Touch. It’s not ideal, but it would get me up and running.

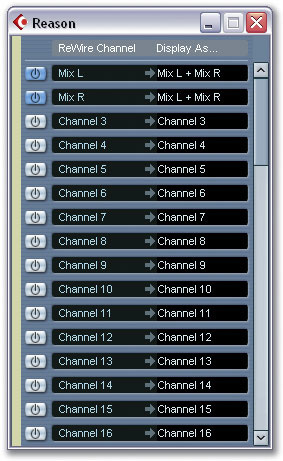


Thank you Jarrett and Achim for pointing out some areas I need to research. I’ll be writing more after I’ve done a bit more homework. Please forgive any ignorance that I display here; I’m just getting started again with audio software after a very long time away.

My audio card is an external “M-Audio Firewire Solo”. ASIO and WDM are supported, both of which are new to me. I’ve been playing around a bit with “Live” and “Max MSP” (demo versions). I think I may need them both to get what I want.

When I add audio tracks that are software based (no real-world instruments being recorded at the time), I’d like to have Live and maybe Max MSP running (on the SAME computer) while I record tracks from my real-world gear, or am preparing for an audio performance. Once I’ve got the basic structure (moving from song section to section) of my audio performance set up, I’d like to have Touch open and controlled by Live or Max MSP (again, SAME computer). I’ll start to play around with Touch and build controls for improv animation that will be done via MIDI and joysticks. These animation controls will then be routed to software audio effects in Live.

Here’s a speculative performance example.

A) One computer running everything. Live is running and switching sections of the song, and Touch is also being switched to different sections (of the same synth) from the same MIDI hardware buttons.

B) The same sliders are being manipulated to control animation and audio effects.

C) One MIDI knob is turned to select the proper next song/Touch-synth, and one button launches the new song and Touch-synth.

I’m pretty sure I’ll have to build one very big “Omni Synth” that contains all the Touch-synths for the entire performance.

I haven’t been able to get both Touch and Live to read the same MIDI input. I’ve tried having Touch generate MIDI out, but Live can’t find it. My next move was to try to find some sort of plug-in or standalone MIDI software that would allow me to patch/route/duplicate MIDI.
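For what it’s worth, the routing idea in the speculative performance example (every controller message copied to both apps, one knob picking the next song, one button launching it) can be sketched in plain Python. This is only the logic, with no real MIDI I/O. The controller number, the note number, the song names, and the `fan_out`/`SongSelector` names are all made up for illustration; a real setup would still need a virtual MIDI port or a MIDI library to feed it.

```python
from typing import Callable, List, Optional, Sequence, Tuple

# A channel message as three raw MIDI bytes: (status, data1, data2).
MidiMessage = Tuple[int, int, int]

# Hypothetical assignments; use whatever your hardware actually sends.
SONG_SELECT_CC = 20   # knob that picks the next song
LAUNCH_NOTE = 36      # button (note-on) that launches it


def fan_out(msg: MidiMessage, sinks: List[Callable[[MidiMessage], None]]) -> None:
    """Duplicate one incoming message to every destination."""
    for sink in sinks:
        sink(msg)


class SongSelector:
    """Knob selects a pending song, button makes it the current one."""

    def __init__(self, songs: Sequence[str]) -> None:
        if not songs:
            raise ValueError("need at least one song")
        self.songs = list(songs)
        self.pending = 0                      # index chosen by the knob
        self.current: Optional[str] = None    # song launched by the button

    def handle(self, msg: MidiMessage) -> None:
        status, data1, data2 = msg
        kind = status & 0xF0                  # strip the channel nibble
        if kind == 0xB0 and data1 == SONG_SELECT_CC:
            # Scale the 0..127 knob value onto 0..len(songs)-1.
            self.pending = data2 * len(self.songs) // 128
        elif kind == 0x90 and data1 == LAUNCH_NOTE and data2 > 0:
            # Note-on with non-zero velocity launches the pending song.
            self.current = self.songs[self.pending]


if __name__ == "__main__":
    live_inbox: List[MidiMessage] = []        # stand-in for the Live side
    touch = SongSelector(["intro", "verse", "outro"])  # stand-in for the Touch side
    sinks = [live_inbox.append, touch.handle]

    fan_out((0xB0, SONG_SELECT_CC, 127), sinks)   # knob fully clockwise
    fan_out((0x90, LAUNCH_NOTE, 100), sinks)      # press the launch button
    print(touch.current, len(live_inbox))
```

The point of `fan_out` is that both sides see the exact same messages, so the same sliders and buttons can drive animation and audio at once.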
I think the JACK audio server is now also available for Windows, but I’m not sure whether both apps need to support ASIO. If it works w/o ASIO on both ends, then you can use it to get the audio from any app into Touch and send additional control signals via MIDI/OSC.

What do you want to do with the audio data? If it’s just for visualization purposes, you might be better off doing the analysis in an optimized package (like Max/MSP, Pd, Bidule) and only sending the “converted” control signals you need via OSC to Touch.

If instead VST plugins were supported, you could even load something like energyXT to have a complete DAW inside your synth (and maybe send sync data from there to Touch). That could have the same benefit as implementing ReWire, while also giving you much more audio power. In case you don’t know energyXT, check it out. It’s a DAW running as a VST plugin, so you can also load additional VSTs/instruments inside it. On the other hand, I’ve never really used ReWire, so I’m probably missing the good stuff an integration might offer.

My goal is one day to control Ableton Live from Touch, basically building a (multitouch) virtual MIDI controller. The problem is that Ableton doesn’t support track loads via MIDI (or OSC). So until they change this, and/or the Cycling ’74/Ableton partnership produces any results, the only option is to use LiveAPI, which is not really developed anymore. Or I stick with having to use the mouse to load tracks, which ain’t fun in a live set.

Yeah, I’m pretty much in the research stage right now.
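To make the “analyze elsewhere, send only the control signal via OSC” idea concrete, here is a rough Python sketch that reduces a block of samples to one RMS level and packs it as an OSC message by hand (OSC strings are null-terminated and padded to a multiple of 4 bytes, floats are 32-bit big-endian). The address `/audio/level`, the host, and port 9000 are assumptions, not anything Touch requires; you would point them at whatever OSC input you set up on the receiving side.

```python
import math
import socket
import struct
from typing import Sequence


def _pad(raw: bytes) -> bytes:
    """Null-terminate and pad to a multiple of 4 bytes, as OSC strings require."""
    raw += b"\x00"
    return raw + b"\x00" * (-len(raw) % 4)


def osc_message(address: str, *values: float) -> bytes:
    """Build an OSC message carrying only float32 arguments."""
    type_tags = "," + "f" * len(values)
    payload = b"".join(struct.pack(">f", v) for v in values)
    return _pad(address.encode("ascii")) + _pad(type_tags.encode("ascii")) + payload


def rms(samples: Sequence[float]) -> float:
    """Root-mean-square level of one block of samples (0.0 for an empty block)."""
    if not samples:
        return 0.0
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def send_level(samples: Sequence[float],
               host: str = "127.0.0.1",       # assumption: same machine
               port: int = 9000,              # assumption: made-up port
               address: str = "/audio/level"  # assumption: made-up address
               ) -> bytes:
    """Send the block's RMS level as a single OSC float over UDP."""
    packet = osc_message(address, rms(samples))
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(packet, (host, port))
    return packet


if __name__ == "__main__":
    block = [0.5, -0.5, 0.5, -0.5]   # stand-in for one buffer of audio
    print(len(send_level(block)), "bytes sent")
```

Sending one float per audio block keeps the traffic tiny compared with streaming the audio itself, which is the reason for doing the analysis outside Touch in the first place.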
